PixelMon: Deep Learning Image Generation Framework

PixelMon is a modular PyTorch framework for image generation using Variational Autoencoders and Generative Adversarial Networks. Designed for rapid experimentation and extensibility, it enables researchers and developers to explore generative models across diverse visual domains.

Model Architectures & Technical Approach

PixelMon implements two complementary generative approaches: Variational Autoencoders (VAEs) for learning compressed representations and Deep Convolutional GANs (DCGANs) for high-quality image synthesis. Both models are built with modular PyTorch components for maximum flexibility and extensibility.

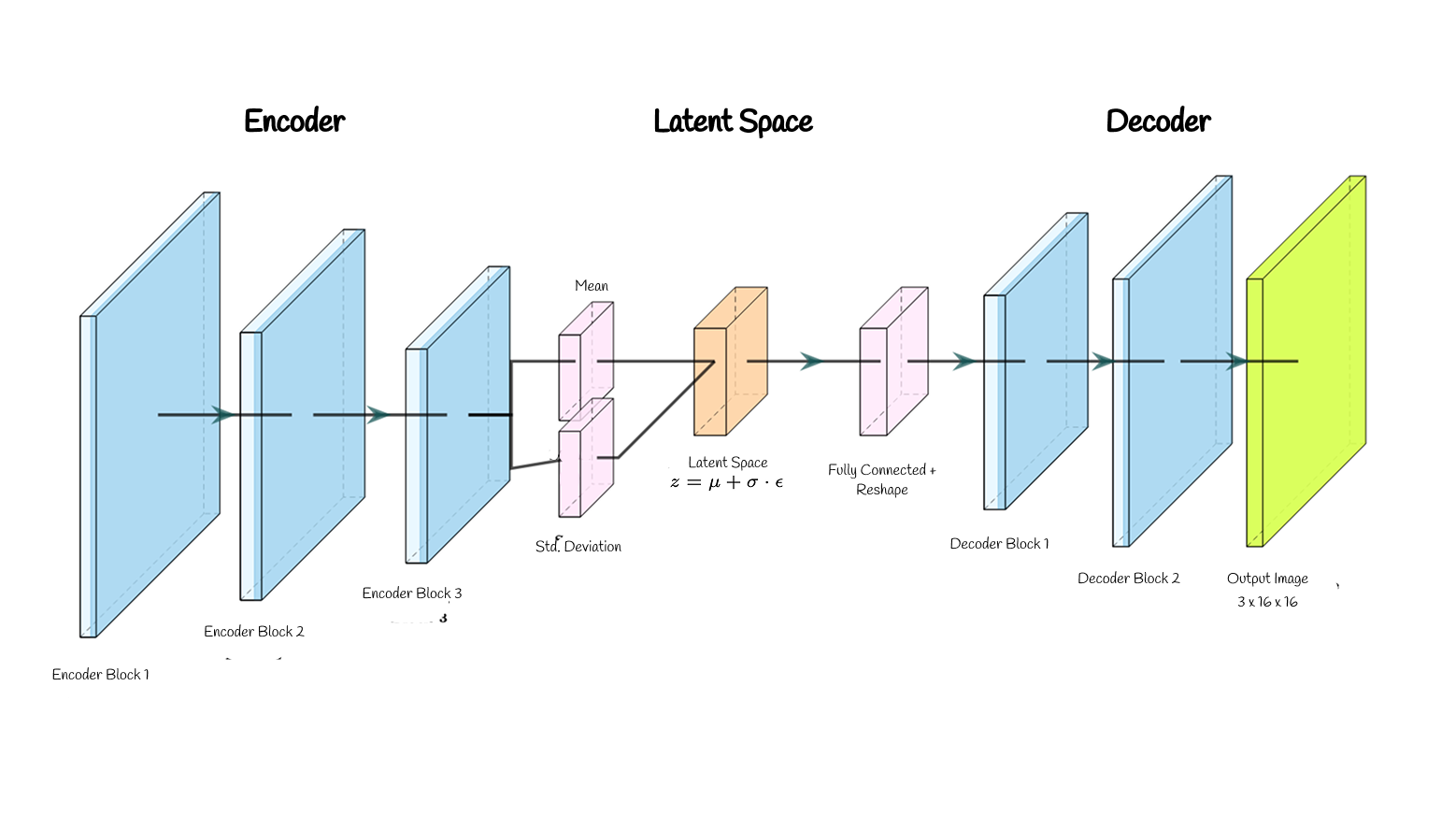

VAE Architecture

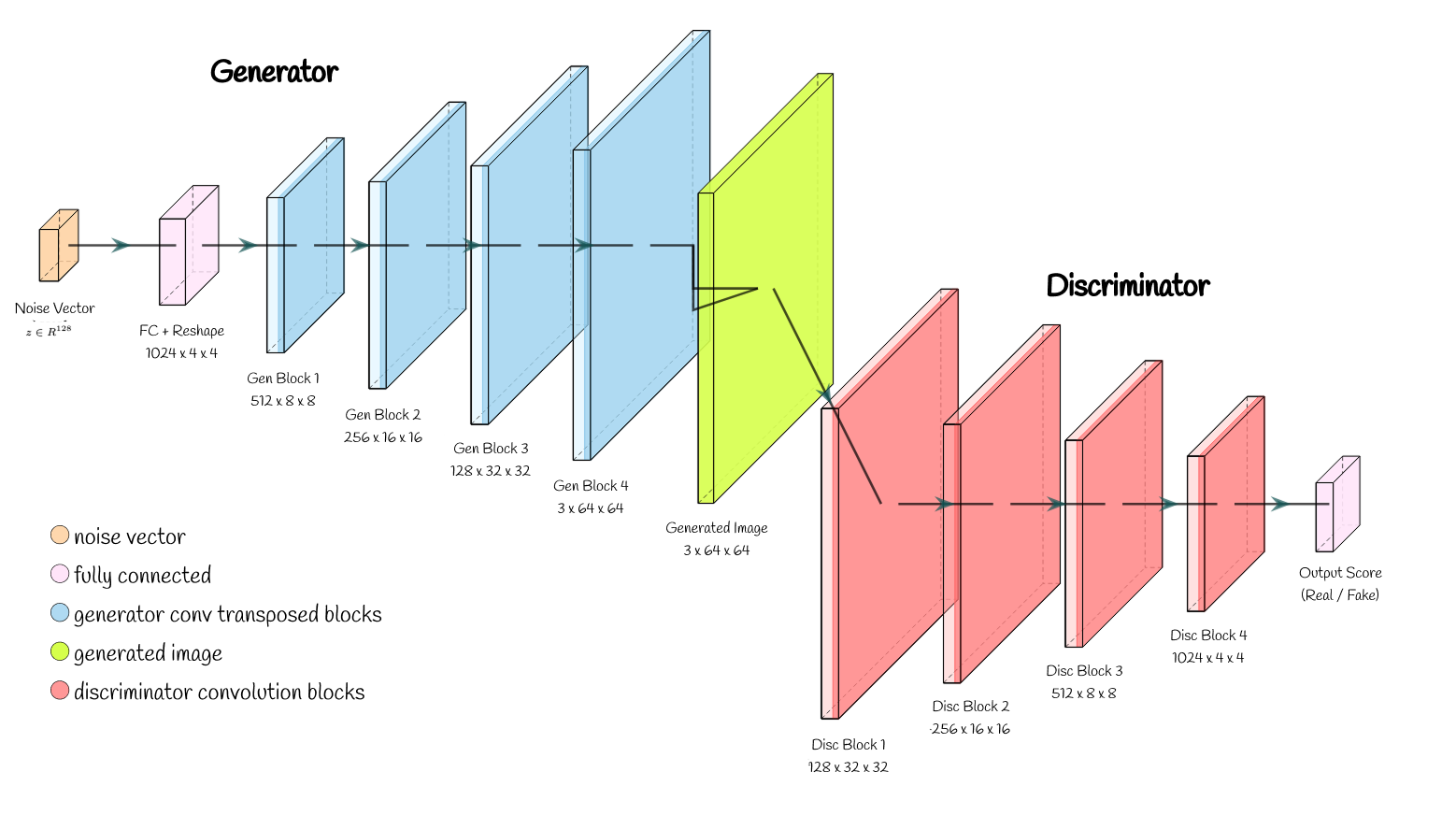

DCGAN Architecture

VAE Technical Details

Architecture Components

- Encoder: 3 convolutional blocks with BatchNorm and LeakyReLU

- Latent space: μ and σ outputs for the reparameterization trick

- Decoder: ConvTranspose2d layers for image reconstruction

- Loss: Reconstruction + KL divergence
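The reparameterization trick and the two-part loss fit in a few lines of PyTorch. This is an illustrative sketch, not PixelMon's code. It assumes the encoder outputs a log-variance (log σ²) rather than σ directly, a common choice for numerical stability, and it uses summed MSE as the reconstruction term. The `beta` weight on the KL term is a hypothetical knob; `beta=1.0` gives the standard VAE objective.

```python
import torch
import torch.nn.functional as F


def reparameterize(mu: torch.Tensor, log_var: torch.Tensor) -> torch.Tensor:
    """Sample z = mu + sigma * eps with eps ~ N(0, I), keeping gradients w.r.t. mu and log_var."""
    std = torch.exp(0.5 * log_var)  # sigma recovered from the (assumed) log-variance output
    eps = torch.randn_like(std)     # randomness lives outside the learned parameters
    return mu + std * eps


def vae_loss(recon: torch.Tensor, target: torch.Tensor,
             mu: torch.Tensor, log_var: torch.Tensor,
             beta: float = 1.0) -> torch.Tensor:
    """Reconstruction error plus beta * KL(q(z|x) || N(0, I)), averaged over the batch."""
    batch_size = target.size(0)
    # Summed squared error per image (an assumption; BCE is common for [0, 1] pixels).
    recon_loss = F.mse_loss(recon, target, reduction="sum") / batch_size
    # Closed-form KL divergence between N(mu, sigma^2) and the standard normal prior.
    kl = -0.5 * torch.sum(1 + log_var - mu.pow(2) - log_var.exp()) / batch_size
    return recon_loss + beta * kl
```

When μ = 0 and log σ² = 0 the KL term vanishes, so a perfect reconstruction gives zero loss; pushing μ away from zero is penalized even if reconstruction stays perfect.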

Technical Features

- Configurable hidden dimensions: [32, 64, 128]

- Latent dimension: 16 for efficient compression

- Input/Output: 3×16×16 RGB images

- Gaussian latent space for smooth interpolation
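A module matching these numbers might look like the following. It is a hypothetical layout, not taken from the PixelMon repository. It assumes stride-2, 3×3 convolutions (so 16×16 shrinks to 2×2 after three blocks), inputs normalized to [-1, 1] with a Tanh output, and a log-variance head alongside μ. The class name `VAE` and its methods are illustrative.

```python
import torch
import torch.nn as nn


class VAE(nn.Module):
    """Convolutional VAE sketch for 3x16x16 images (hypothetical layout)."""

    def __init__(self, in_channels: int = 3, latent_dim: int = 16,
                 hidden_dims: tuple = (32, 64, 128), image_size: int = 16):
        super().__init__()
        self.hidden_dims = list(hidden_dims)
        # Each stride-2 block halves the spatial size: 16 -> 8 -> 4 -> 2.
        self.final_size = image_size // (2 ** len(self.hidden_dims))
        flat = self.hidden_dims[-1] * self.final_size ** 2

        # Encoder: Conv2d + BatchNorm + LeakyReLU per hidden dimension.
        blocks, channels = [], in_channels
        for h in self.hidden_dims:
            blocks += [nn.Conv2d(channels, h, kernel_size=3, stride=2, padding=1),
                       nn.BatchNorm2d(h), nn.LeakyReLU()]
            channels = h
        self.encoder = nn.Sequential(*blocks)
        self.fc_mu = nn.Linear(flat, latent_dim)
        self.fc_log_var = nn.Linear(flat, latent_dim)

        # Decoder: mirror the encoder with ConvTranspose2d layers that double the size.
        self.decoder_input = nn.Linear(latent_dim, flat)
        rev = self.hidden_dims[::-1]  # 128 -> 64 -> 32
        up = []
        for c_in, c_out in zip(rev, rev[1:]):
            up += [nn.ConvTranspose2d(c_in, c_out, kernel_size=3, stride=2,
                                      padding=1, output_padding=1),
                   nn.BatchNorm2d(c_out), nn.LeakyReLU()]
        # Final upsampling back to full resolution and RGB, squashed to [-1, 1].
        up += [nn.ConvTranspose2d(rev[-1], in_channels, kernel_size=3, stride=2,
                                  padding=1, output_padding=1),
               nn.Tanh()]
        self.decoder = nn.Sequential(*up)

    def encode(self, x: torch.Tensor):
        h = torch.flatten(self.encoder(x), start_dim=1)
        return self.fc_mu(h), self.fc_log_var(h)

    def decode(self, z: torch.Tensor) -> torch.Tensor:
        h = self.decoder_input(z).view(-1, self.hidden_dims[-1],
                                       self.final_size, self.final_size)
        return self.decoder(h)

    def forward(self, x: torch.Tensor):
        mu, log_var = self.encode(x)
        # Reparameterization trick: z = mu + sigma * eps.
        z = mu + torch.exp(0.5 * log_var) * torch.randn_like(mu)
        return self.decode(z), mu, log_var
```

Calling `VAE()(torch.randn(8, 3, 16, 16))` returns a reconstruction of the same shape plus the two 16-dimensional latent statistics, which feed directly into a reconstruction + KL loss.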

DCGAN Technical Details

Generator Network

- Input: Random noise vector (latent_dim=100)

- Architecture: ConvTranspose2d upsampling layers

- Normalization: BatchNorm2d for stable training

- Activation: ReLU → Tanh output
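A generator following these bullets is sketched below. The 16×16 output size (matching the pixel art data), the `feature_maps` width, and the 4×4 kernel schedule borrowed from the original DCGAN paper are assumptions; PixelMon's exact layer counts are not given here.

```python
import torch
import torch.nn as nn


class Generator(nn.Module):
    """DCGAN-style generator sketch: 100-d noise -> 3x16x16 image in [-1, 1]."""

    def __init__(self, latent_dim: int = 100, feature_maps: int = 64, channels: int = 3):
        super().__init__()
        self.net = nn.Sequential(
            # (N, latent_dim, 1, 1) -> (N, fm*4, 4, 4)
            nn.ConvTranspose2d(latent_dim, feature_maps * 4, 4, 1, 0, bias=False),
            nn.BatchNorm2d(feature_maps * 4),
            nn.ReLU(inplace=True),
            # -> (N, fm*2, 8, 8)
            nn.ConvTranspose2d(feature_maps * 4, feature_maps * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(feature_maps * 2),
            nn.ReLU(inplace=True),
            # -> (N, channels, 16, 16); Tanh matches data normalized to [-1, 1]
            nn.ConvTranspose2d(feature_maps * 2, channels, 4, 2, 1, bias=False),
            nn.Tanh(),
        )

    def forward(self, z: torch.Tensor) -> torch.Tensor:
        # Accept flat (N, latent_dim) noise and reshape it into a 1x1 feature map.
        return self.net(z.view(z.size(0), -1, 1, 1))
```

Sampling is then `Generator()(torch.randn(16, 100))`, which yields a batch of sixteen 3×16×16 images.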

Discriminator Network

- Input: Real or generated images

- Architecture: Conv2d feature extraction

- Activation: LeakyReLU for gradient flow

- Output: Binary classification (real/fake)
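A matching discriminator for 3×16×16 inputs could look like this. It returns one raw logit per image (to be paired with `nn.BCEWithLogitsLoss`) instead of a sigmoid probability, and it uses BatchNorm in the hidden layer as the DCGAN paper does. Both choices, and the layer sizes, are assumptions rather than PixelMon's documented configuration.

```python
import torch
import torch.nn as nn


class Discriminator(nn.Module):
    """DCGAN-style discriminator sketch: 3x16x16 image -> one real/fake logit."""

    def __init__(self, feature_maps: int = 64, channels: int = 3):
        super().__init__()
        self.net = nn.Sequential(
            # (N, 3, 16, 16) -> (N, fm, 8, 8); no BatchNorm on the input layer
            nn.Conv2d(channels, feature_maps, 4, 2, 1, bias=False),
            nn.LeakyReLU(0.2, inplace=True),
            # -> (N, fm*2, 4, 4)
            nn.Conv2d(feature_maps, feature_maps * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(feature_maps * 2),
            nn.LeakyReLU(0.2, inplace=True),
            # -> (N, 1, 1, 1): a single score per image
            nn.Conv2d(feature_maps * 2, 1, 4, 1, 0, bias=False),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Raw logits of shape (N,); apply sigmoid only when a probability is needed.
        return self.net(x).view(-1)
```

Returning logits and using `BCEWithLogitsLoss` is numerically safer than a final `Sigmoid` followed by `BCELoss`.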

Implementation Philosophy

Modularity

Each component, from dataset loaders and model architectures to training scripts, is designed as an independent, reusable module; datasets and models inherit from PyTorch base classes for seamless integration.

Experimentation

The framework enables rapid switching between models and datasets with minimal code changes, supporting iterative research and development workflows.
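One common way to get this kind of switching is a name-to-constructor registry. The sketch below is hypothetical: `MODEL_REGISTRY`, `register_model`, `build_model`, and the placeholder `TinyMLP` are illustrative names, not part of PixelMon's documented API.

```python
from typing import Callable, Dict

import torch.nn as nn

# Hypothetical registry mapping a short config name to a model constructor.
MODEL_REGISTRY: Dict[str, Callable[..., nn.Module]] = {}


def register_model(name: str):
    """Class decorator that records a model under `name`."""
    def decorator(cls):
        if name in MODEL_REGISTRY:
            raise KeyError(f"model '{name}' is already registered")
        MODEL_REGISTRY[name] = cls
        return cls
    return decorator


def build_model(name: str, **kwargs) -> nn.Module:
    """Instantiate a registered model; swapping models is a one-string change."""
    if name not in MODEL_REGISTRY:
        raise KeyError(f"unknown model '{name}'; available: {sorted(MODEL_REGISTRY)}")
    return MODEL_REGISTRY[name](**kwargs)


@register_model("tiny_mlp")
class TinyMLP(nn.Module):
    """Placeholder model used only to demonstrate registration."""

    def __init__(self, in_features: int = 4, out_features: int = 2):
        super().__init__()
        self.fc = nn.Linear(in_features, out_features)

    def forward(self, x):
        return self.fc(x)
```

With a registry in place, a config value such as `model: tiny_mlp` selects the architecture, and the training loop never has to import a concrete class.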

Reproducibility

Consistent random seeds, parameter tracking, and standardized training loops ensure reliable experimental comparisons and results validation.
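Seeding in a PyTorch project typically has to cover Python's `random`, NumPy, and torch together. A minimal helper, assuming it is called once at startup (the name `set_seed` is hypothetical):

```python
import random

import numpy as np
import torch


def set_seed(seed: int = 42) -> None:
    """Seed every RNG a typical PyTorch training run touches."""
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)           # CPU generator (and CUDA in recent PyTorch versions)
    torch.cuda.manual_seed_all(seed)  # explicit for all GPUs; silently ignored without CUDA
    # Prefer deterministic cuDNN kernels over autotuned (possibly faster) ones.
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False
```

Even with these settings some CUDA operations remain nondeterministic; `torch.use_deterministic_algorithms(True)` makes PyTorch raise an error when such an operation is used.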

Experiments & Datasets

PixelMon was evaluated on two primary datasets, each chosen to test a different aspect of generative modeling: low-resolution pixel art for rapid prototyping and anime faces for complex, high-variance generation. Together, they test whether the same framework handles both simple, low-resolution domains and visually rich, high-variance ones.

Pixel Art Dataset

89,000 images at 16×16 RGB resolution from diverse pixel art styles and games. Chosen for its clear visual patterns, manageable computational requirements, and suitability for rapid experimentation and architecture validation.

Dataset Characteristics

- Diversity: Multiple art styles from classic arcade games to modern indie titles

- Consistency: Uniform 16×16 resolution with clear, distinct visual patterns

- Complexity: Balanced between simplicity for rapid training and richness for meaningful generation

- Training Speed: Small image size enables fast iteration cycles

Anime Faces Dataset

63,632 images of anime character faces, providing complex facial features, diverse art styles, and challenging generation targets. Used to validate model performance on higher-complexity visual patterns and scalability toward more realistic domains.

Dataset Characteristics

- Complexity: Rich facial features, expressions, and artistic variations

- Variance: Wide range of character designs, hair colors, and facial structures

- Challenge: Tests the model's ability to capture fine details and avoid mode collapse

- Realism: Bridges the gap between synthetic pixel art and natural image domains

Dataset Infrastructure & Extensibility

Extensible Framework: Additional datasets including Pokemon (900 images), Landscapes (12,000 images), and MNIST are supported for future experiments and comparative studies.

PyTorch Integration: All dataset handlers inherit from PyTorch's Dataset class, with automatic preprocessing, normalization, and tensor conversion pipelines.

Modular Design: Each dataset handler can be imported independently, enabling custom experimentation workflows and easy integration with external datasets.

Scalability Testing: The framework architecture has been validated across resolutions from 16×16 to 224×224, demonstrating adaptability to diverse image sizes and domains.
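To illustrate the preprocessing, normalization, and tensor-conversion steps, here is a small `Dataset` subclass. It is a hypothetical stand-in (`ImageArrayDataset` is not a PixelMon class): it wraps an in-memory uint8 array instead of reading image files, and it assumes [-1, 1] normalization to match a Tanh output layer. The optional `image_size` resize shows how one handler could serve both 16×16 and larger resolutions.

```python
from typing import Optional

import numpy as np
import torch
import torch.nn.functional as F
from torch.utils.data import Dataset


class ImageArrayDataset(Dataset):
    """Wraps uint8 images of shape (N, H, W, 3) as float tensors in [-1, 1]."""

    def __init__(self, images: np.ndarray, image_size: Optional[int] = None):
        if images.ndim != 4 or images.shape[-1] != 3:
            raise ValueError("expected an array of shape (N, H, W, 3)")
        self.images = images
        self.image_size = image_size

    def __len__(self) -> int:
        return len(self.images)

    def __getitem__(self, idx: int) -> torch.Tensor:
        # HWC uint8 -> CHW float in [0, 1]
        x = torch.from_numpy(self.images[idx]).permute(2, 0, 1).float() / 255.0
        size = self.image_size
        if size is not None and x.shape[-2:] != (size, size):
            # Optional resize so one pipeline can serve several resolutions.
            x = F.interpolate(x.unsqueeze(0), size=(size, size),
                              mode="bilinear", align_corners=False).squeeze(0)
        # Map [0, 1] -> [-1, 1] to match a Tanh output layer.
        return x * 2.0 - 1.0
```

Wrapping it in `torch.utils.data.DataLoader(ds, batch_size=128, shuffle=True)` yields batches of shape `(128, 3, H, W)`. When upscaling pixel art, `mode="nearest"` would preserve hard pixel edges better than bilinear filtering.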

Results

PixelMon achieved strong results on both datasets, supporting the effectiveness of its modular design. The key outcomes for each experiment are summarized below.

VAE: Pixel Art

- Clear latent space organization and smooth interpolation

- Fast convergence and stable training

- Meaningful reconstruction of diverse art styles
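Smooth interpolation is usually checked by decoding points along a path between two latent codes. A minimal linear version is below; the function name is hypothetical, and spherical interpolation (slerp) is a common alternative for Gaussian latents.

```python
import torch


def interpolate_latents(z_start: torch.Tensor, z_end: torch.Tensor,
                        steps: int = 8) -> torch.Tensor:
    """Linearly blend two latent vectors into a (steps, latent_dim) batch, endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2 to include both endpoints")
    # Column of blend weights 0, ..., 1 that broadcasts against each latent vector.
    alphas = torch.linspace(0.0, 1.0, steps, device=z_start.device).unsqueeze(1)
    return (1 - alphas) * z_start + alphas * z_end
```

Encoding two images, taking their μ vectors as endpoints, and passing the resulting `(steps, 16)` batch through a trained decoder produces an interpolation strip; smooth visual transitions along the strip indicate a well-organized latent space.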

GAN: Pixel Art

- Coherent pixel art styles and character features

- High-quality generation with minimal overfitting

- Rapid training convergence in just 10 epochs

VAE: Anime Faces

- Successful encoding and reconstruction of facial features

- Demonstrated scalability to complex domains

- Preserved artistic style consistency

GAN: Anime Faces

- High-quality face generation with style consistency

- Robust performance on high-variance data

- Sharp, detailed facial features and expressions

Key Achievements

- Modular Framework: Enabled rapid cross-dataset and cross-model experimentation

- Stable Training: Consistent convergence and reproducibility across runs

- Quality Generation: Maintained visual coherence and domain-specific characteristics

- Extensibility: Easy to add new datasets, models, and training strategies

- Performance: Achieved strong results with minimal hyperparameter tuning

- Documentation: Comprehensive code documentation and usage examples

Technologies & Tools

Installation & Usage

Python API Example

Technical Documentation & Blog

Medium Blog Post: "Image Generation: VAEs and GANs"

I documented the complete development process, technical challenges, and experimental results in a comprehensive blog post. The article covers the mathematical foundations of both VAEs and GANs, implementation details, and lessons learned from training on diverse datasets.

Key Technical Insights

- VAE Latent Space: Lower-dimensional representations effectively capture essential image features across different domains

- GAN Training: Carefully balancing the generator and discriminator learning rates helps prevent mode collapse

- Dataset Scaling: Framework performance was validated from 16×16 pixel art up to higher-complexity domains such as anime faces

- Framework Design: Modular architecture enables rapid experimentation across different model types and datasets
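The learning-rate balance mentioned above is easiest to control with a separate optimizer for each network. This sketch of one alternating DCGAN update assumes a discriminator that outputs raw logits of shape `(batch,)` and a generator that accepts flat `(batch, latent_dim)` noise. The Adam defaults (lr=2e-4, β1=0.5) come from the DCGAN paper and are not PixelMon's reported settings; the function names are hypothetical.

```python
from typing import Tuple

import torch
import torch.nn as nn


def make_optimizers(generator: nn.Module, discriminator: nn.Module,
                    lr_g: float = 2e-4, lr_d: float = 2e-4):
    """Separate Adam optimizers so each network's learning rate can be tuned independently."""
    opt_g = torch.optim.Adam(generator.parameters(), lr=lr_g, betas=(0.5, 0.999))
    opt_d = torch.optim.Adam(discriminator.parameters(), lr=lr_d, betas=(0.5, 0.999))
    return opt_g, opt_d


def gan_train_step(generator: nn.Module, discriminator: nn.Module,
                   opt_g: torch.optim.Optimizer, opt_d: torch.optim.Optimizer,
                   real: torch.Tensor, latent_dim: int = 100) -> Tuple[float, float]:
    """One alternating update; returns (discriminator loss, generator loss)."""
    criterion = nn.BCEWithLogitsLoss()
    batch = real.size(0)
    ones = torch.ones(batch, device=real.device)
    zeros = torch.zeros(batch, device=real.device)

    # 1) Discriminator: push real logits toward 1 and fake logits toward 0.
    noise = torch.randn(batch, latent_dim, device=real.device)
    fake = generator(noise)
    opt_d.zero_grad()
    loss_d = (criterion(discriminator(real), ones)
              + criterion(discriminator(fake.detach()), zeros))  # detach: no G update here
    loss_d.backward()
    opt_d.step()

    # 2) Generator: non-saturating loss, i.e. make D label the fakes as real.
    opt_g.zero_grad()
    loss_g = criterion(discriminator(fake), ones)
    loss_g.backward()
    opt_g.step()
    return loss_d.item(), loss_g.item()
```

When one network starts to dominate (for example, the discriminator loss collapses toward zero), lowering its learning rate is a one-argument change to `make_optimizers`.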

Development Learnings

- Code Organization: Proper separation of concerns between data loading, model architecture, and training logic

- Reproducibility: Consistent random seeds and parameter tracking for reliable experimental comparisons

- GPU Optimization: Efficient batch processing and memory management for large-scale training

- Library Design: PyTorch-compatible interfaces enable easy integration with existing ML workflows

Further Reading & Wrap-up

PixelMon is open-source and documented in detail. For a deeper dive into the technical challenges, lessons learned, and implementation details, check out the blog post and code repository below. The framework continues to be a valuable foundation for exploring generative models and serves as a template for building modular ML research tools.